2025 - Center for AI Safety & Scale AI

Humanity’s Last Exam

Co-author on Humanity’s Last Exam (HLE), a frontier multi-modal benchmark of 2,500 closed-ended academic questions designed to remain challenging for state-of-the-art LLMs across mathematics, the natural sciences, and the humanities; contributed graduate-level math problems to the benchmark’s question pool.

TL;DR

Co-author on Humanity’s Last Exam (HLE), a frontier multi-modal benchmark of 2,500 closed-ended academic questions designed to remain challenging for state-of-the-art LLMs across mathematics, the natural sciences, and the humanities; contributed graduate-level math problems to the benchmark’s question pool.

Research Note

Overview

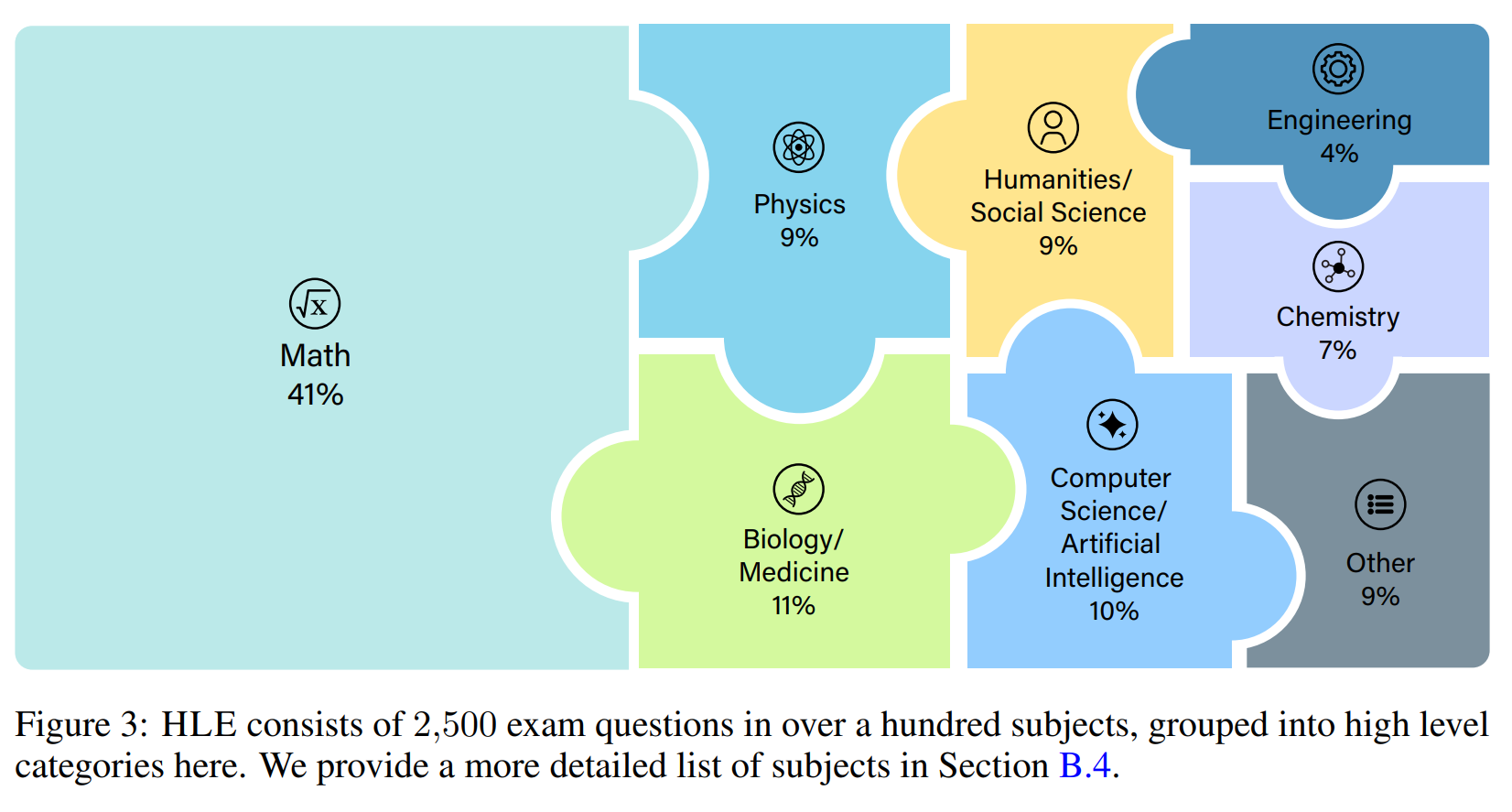

Humanity’s Last Exam (HLE) is a frontier benchmark designed to stress-test large language models on expert-level, closed-ended academic questions. The benchmark contains 2,500 publicly released items authored by domain experts across a wide range of subjects, including:

- mathematics,

- physics and other natural sciences,

- biology and medicine,

- humanities and social sciences,

- computer science, engineering, and related fields.

Each question is constructed to have a single, objectively checkable answer and to resist trivial web lookup or pattern memorization. The dataset includes both multiple-choice and short-answer formats, and a subset of questions is multi-modal, requiring models to combine textual reasoning with visual information.

HLE is jointly developed and maintained by the Center for AI Safety and Scale AI as a long-term benchmark that remains challenging even as frontier LLMs improve over time.

Benchmark design and goals

HLE is positioned as a “last exam” in the sense that it aims to replace saturated benchmarks like MMLU with a more durable, harder set of problems. Its design emphasizes:

- Closed-ended evaluation

Answers are graded automatically via exact matching (with light normalization), which makes scores reproducible and easy to compare across models.

- Expert-level difficulty

Many items are closer to graduate-level or advanced undergraduate questions than to standard standardized-test problems, particularly in mathematics and the sciences.

- Breadth of coverage

Rather than focusing on a single domain, HLE spans dozens of subject areas, functioning as a general academic exam rather than a narrow task dataset.

- Frontier relevance

Current state-of-the-art models still leave a noticeable gap to human expert performance on HLE, making it useful as a capability barometer for the next few years.

Because of these properties, HLE is increasingly referenced in discussions of AI capability evaluation, policy, and safety, as it offers a concrete, public measure of how close (or far) frontier models are from expert human performance on rigorous, graded tasks.

My Role

I am listed as an author on Humanity’s Last Exam (HLE) with a focused contribution in the mathematics and biology sections. I contributed expert-level questions in these areas that:

- satisfy HLE’s constraints on being closed-ended and automatically gradable,

- were designed to be non-trivial for current LLMs,

- and passed the benchmark’s internal review and filtering pipeline before inclusion in the released question pool.

My role is thus question design within the broader author team, complementing my main research on LLM safety and evaluation by working directly on a widely used, high-difficulty benchmark.